If you do not already have a working Kubernetes cluster, you may set up a test cluster on your local machine using minikube. We recommend using the latest release of minikube with the DNS addon enabled. Be aware that the default minikube configuration is not enough for running Spark applications. We recommend 3 CPUs and 4g of memory to be able to start a simple Spark application with a single executor.

Check the version of the kubernetes-client library in your Spark environment and its compatibility with your Kubernetes cluster's version. You must also have appropriate permissions to list, create, edit and delete pods in your cluster.
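The resource recommendation and the permission requirement above can be turned into concrete commands. The following is a minimal sketch that only builds the command lines and does not run them. It assumes the `--cpus` and `--memory` flags of `minikube start` and the `kubectl auth can-i` subcommand. The function names are hypothetical, the checks assume the permissions concern pods, and mapping "edit" to the RBAC verb `update` is an assumption.

```python
import shlex


def minikube_start_command(cpus=3, memory_mb=4096):
    """Build the argv for starting minikube with 3 CPUs and 4g of memory.

    Memory is given in megabytes here; 4096 stands in for "4g".
    """
    return ["minikube", "start", f"--cpus={cpus}", f"--memory={memory_mb}"]


def permission_checks(resource="pods",
                      verbs=("list", "create", "update", "delete")):
    """Build one `kubectl auth can-i` command per verb.

    Assumption: "edit" in the text is approximated by the RBAC verb "update".
    """
    return [["kubectl", "auth", "can-i", verb, resource] for verb in verbs]


if __name__ == "__main__":
    # Print the commands so they can be reviewed before being run by hand.
    print(shlex.join(minikube_start_command()))
    for command in permission_checks():
        print(shlex.join(command))
```

Printing the commands instead of executing them keeps the sketch safe to run on a machine without minikube or kubectl installed.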

DOCKER FOR MAC KUBERNETES MOUNT LOCAL VOLUME DRIVER

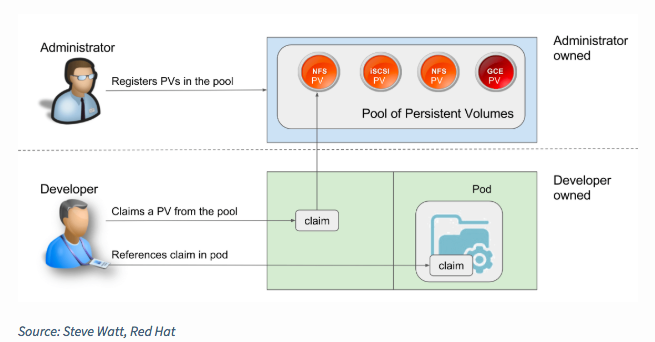

Spark can run on clusters managed by Kubernetes. This feature makes use of the native Kubernetes scheduler that has been added to Spark.

Security features like authentication are not enabled by default. When deploying a cluster that is open to the internet or an untrusted network, it's important to secure access to the cluster to prevent unauthorized applications from running on the cluster. Please see Spark Security and the specific security sections in this doc before running Spark.

Images built from the project provided Dockerfiles contain a default USER directive with a default UID of 185. This means that the resulting images will be running the Spark processes as this UID inside the container. Security conscious deployments should consider providing custom images with USER directives specifying their desired unprivileged UID and GID. The resulting UID should include the root group in its supplementary groups in order to be able to run the Spark executables. Users building their own images with the provided docker-image-tool.sh script can use the -u option to specify the desired UID.

Alternatively, the Pod Template feature can be used to add a Security Context with a runAsUser to the pods that Spark submits. This can be used to override the USER directives in the images themselves. Please bear in mind that this requires cooperation from your users and as such may not be a suitable solution for shared environments. Cluster administrators should use Pod Security Policies if they wish to limit the users that pods may run as.

Volume Mounts

As described later in this document under Using Kubernetes Volumes, Spark on K8S provides configuration options that allow for mounting certain volume types into the driver and executor pods.
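To make the volume mount configuration options mentioned above more concrete, here is a minimal sketch that builds `--conf` arguments for `spark-submit`. It assumes Spark's `spark.kubernetes.{driver,executor}.volumes.[VolumeType].[VolumeName]` property scheme with its `mount.path`, `mount.readOnly` and `options.*` suffixes. The helper names, the volume name `data`, and both paths are hypothetical values chosen for illustration.

```python
def volume_mount_conf(role, volume_type, name, mount_path,
                      options=None, read_only=False):
    """Return Spark properties that mount one volume into driver or executor pods.

    role is "driver" or "executor"; volume_type is e.g. "hostPath".
    """
    prefix = f"spark.kubernetes.{role}.volumes.{volume_type}.{name}"
    conf = {
        f"{prefix}.mount.path": mount_path,
        f"{prefix}.mount.readOnly": str(read_only).lower(),
    }
    # Volume-type specific options, e.g. "path" for a hostPath volume.
    for key, value in (options or {}).items():
        conf[f"{prefix}.options.{key}"] = value
    return conf


def to_submit_args(conf):
    """Flatten a property dict into repeated `--conf key=value` arguments."""
    args = []
    for key, value in conf.items():
        args += ["--conf", f"{key}={value}"]
    return args


if __name__ == "__main__":
    conf = {}
    for role in ("driver", "executor"):
        # Hypothetical example: expose /tmp/spark-data on the node as /data.
        conf.update(volume_mount_conf(role, "hostPath", "data", "/data",
                                      options={"path": "/tmp/spark-data"}))
    print(" ".join(to_submit_args(conf)))
```

On a local single-node cluster, a hostPath volume refers to a path on the Kubernetes node (the VM), not necessarily on the host machine, so the directory may first need to be shared into the node.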
